DynTypo: Example-based Dynamic Text Effects Transfer

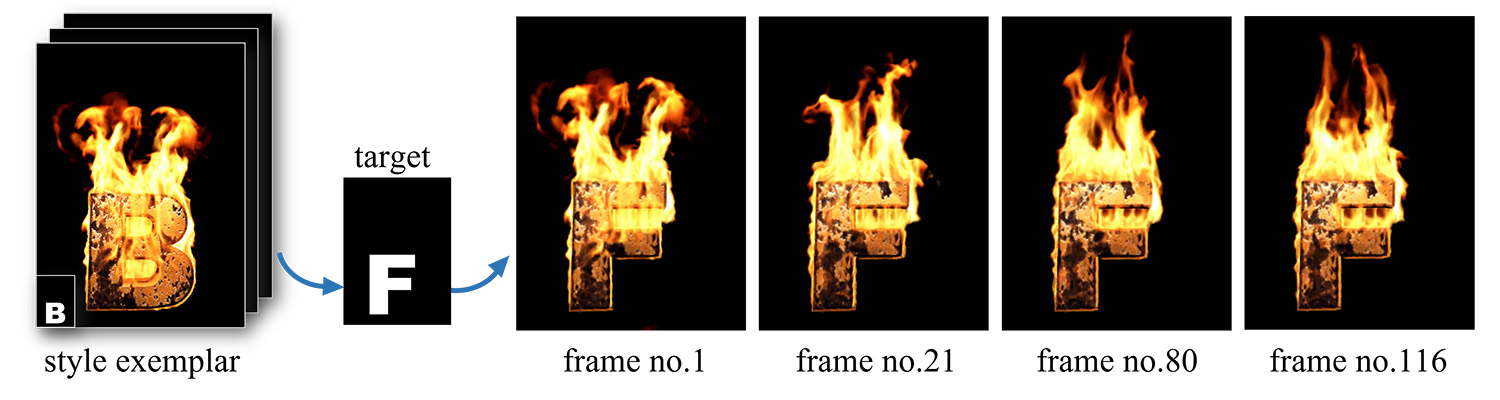

Fig. 1. The dynamic text effects from an exemplar video (far left) can be transferred to a target text image (bottom) using our method.

Abstract

In this paper, we present a novel approach to dynamic text effects transfer based on example-based texture synthesis. In contrast to previous works, which require an input video of the target to provide motion guidance, we aim to animate a still image of the target text by transferring the desired dynamic effects from an observed exemplar. Because the target guidance is simple and realistic effects are complex, the synthesis is prone to temporal artifacts such as flicker and pulsation. To address this problem, our core idea is to optimize the textural coherence across all keyframes in parallel to find a common Nearest-neighbor Field (NNF), which implicitly transfers motion properties from source to target. We also introduce an improved PatchMatch that employs a distance-based weight map and Simulated Annealing (SA) for deep direction-guided propagation, allowing intense dynamic effects to be transferred completely without any semantic guidance. Furthermore, the generated dynamic texts can be seamlessly embedded into any static background or video clip by Poisson image editing. Experimental results demonstrate the effectiveness and superiority of our method in dynamic text effects transfer through extensive comparisons with state-of-the-art algorithms. We also show the potential of our method via multiple experiments in various application domains.
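As a rough illustration of why a single NNF shared by all keyframes can carry motion over implicitly, the sketch below warps every source keyframe with the same correspondence field. It is not the authors' implementation: the function name `apply_common_nnf`, the per-pixel (rather than per-patch) lookup, and the nested-list frame layout are assumptions made for brevity, and the optimization that actually finds the NNF is omitted.

```python
def apply_common_nnf(source_frames, nnf):
    """Warp every source keyframe with one shared nearest-neighbor field.

    source_frames: list of keyframes, each a 2-D list indexed as frame[y][x].
    nnf: 2-D list over target pixels; nnf[y][x] = (sy, sx) is the source
         pixel assigned to target pixel (y, x). (Hypothetical layout.)

    Because the same nnf is reused for every keyframe, each target pixel
    follows the temporal evolution of one fixed source location, so the
    source motion appears in the target without explicit motion guidance.
    """
    return [
        [[frame[sy][sx] for (sy, sx) in row] for row in nnf]
        for frame in source_frames
    ]


# Two tiny 2x2 "keyframes" and an NNF that mirrors each row horizontally.
source_frames = [
    [[1, 2], [3, 4]],
    [[5, 6], [7, 8]],
]
nnf = [
    [(0, 1), (0, 0)],
    [(1, 1), (1, 0)],
]
target_frames = apply_common_nnf(source_frames, nnf)
```

Estimating a separate NNF per keyframe would let a target pixel jump between unrelated source locations from one frame to the next, which is one way flicker can arise; sharing the field rules that out by construction.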

Supplemental Video

It is recommended to switch to 720p for better visual results.

Experimental Results

Dynamic Text Effects Transfer

Citation

@inproceedings{men2019dyntypo,
  title={DynTypo: Example-based Dynamic Text Effects Transfer},
  author={Men, Yifang and Lian, Zhouhui and Tang, Yingmin and Xiao, Jianguo},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2019}
}